How to Analyze Slack Conversations with Mantium

In this tutorial, you will learn how to analyze Slack conversations using Mantium's platform. By the end of this guide, you will be able to import messages from specific Slack channels, aggregate the text, generate summaries, and synchronize the data for real-time analysis or machine learning purposes.

Introduction

Mantium connects to Slack so that you can draw insights from your team's conversations. This tutorial focuses on four tasks: importing messages from specific Slack channels, aggregating the text, generating summaries, and keeping the data synchronized for real-time analysis or machine learning purposes.

Video

We understand that sometimes it's easier to learn by watching rather than reading. If you prefer a more visual explanation, feel free to check out our accompanying video tutorial below. If you prefer reading or are unable to watch the video, please continue with the text documentation.

Prerequisites

- API Keys for OpenAI

- Access to a Slack server with proper permissions.

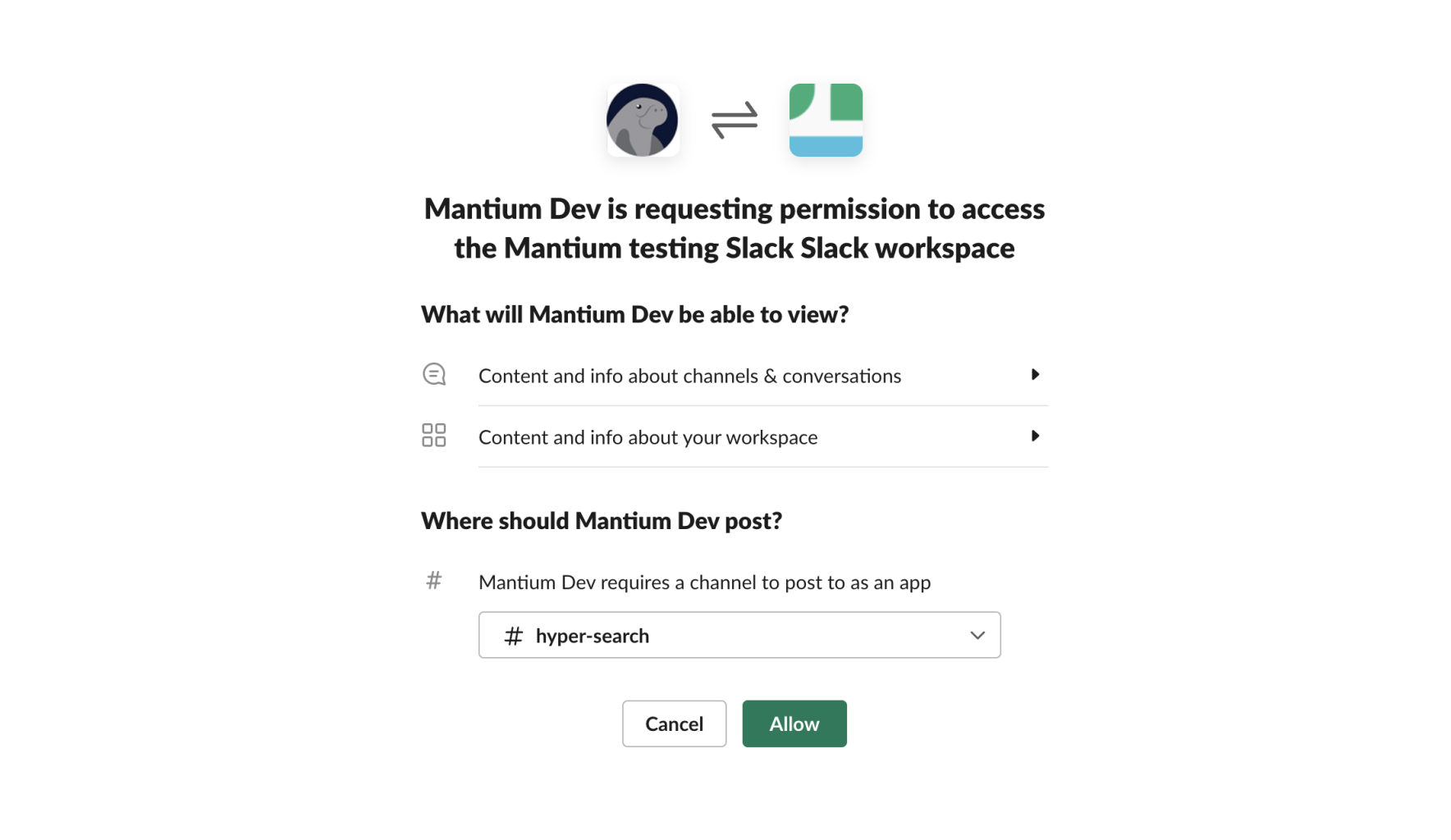

Connect and Import Data from Slack

After connecting your Slack account to Mantium, the next step is to import Slack data.

To do this:

- Navigate to the Data Sources section by clicking Data Source on the left navigation bar.

- Select Slack from the Data Sources list.

- Choose an existing Slack connector if you already have one set up, or add a new Slack connector by following these instructions.

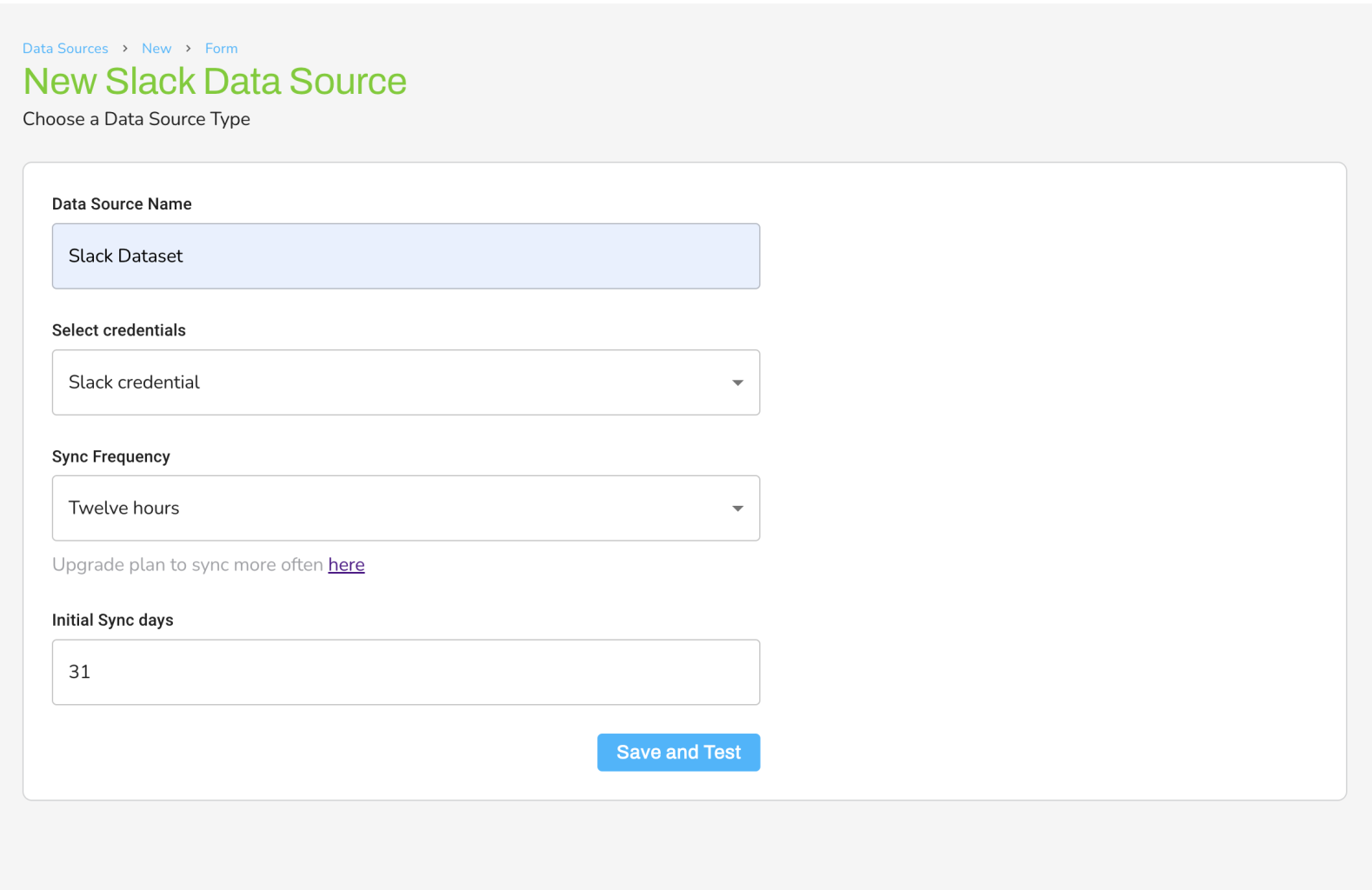

- Provide the information to label the Data Source, and also set “Sync Frequency” to keep your data refreshed.

- On the next page, click “Manual Sync” in the top right corner to perform the initial sync.

- Wait a few moments for the sync to be completed, and you will have your data ready for further transformation.

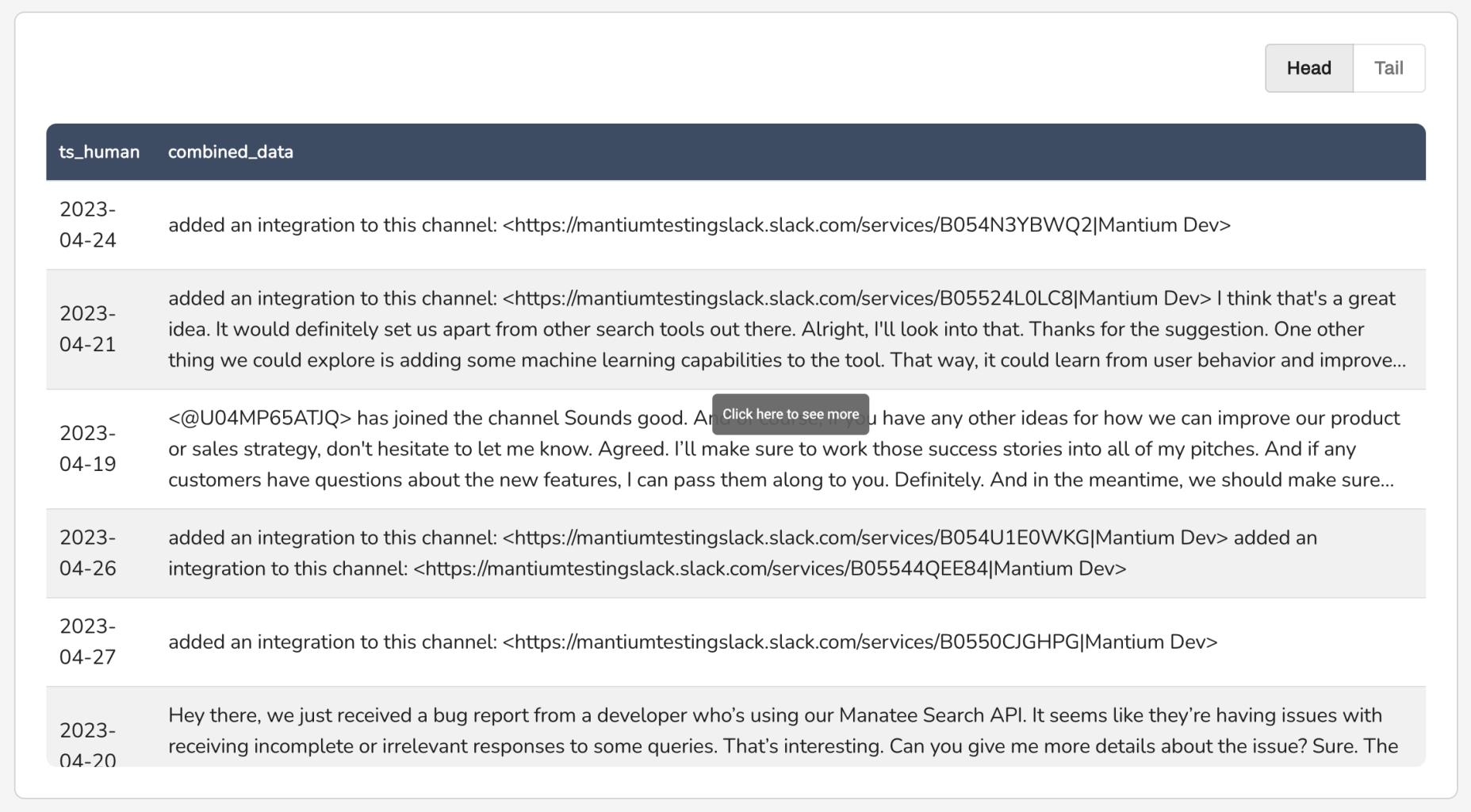

At this point, we have successfully imported messages from the selected channel. See the example below.

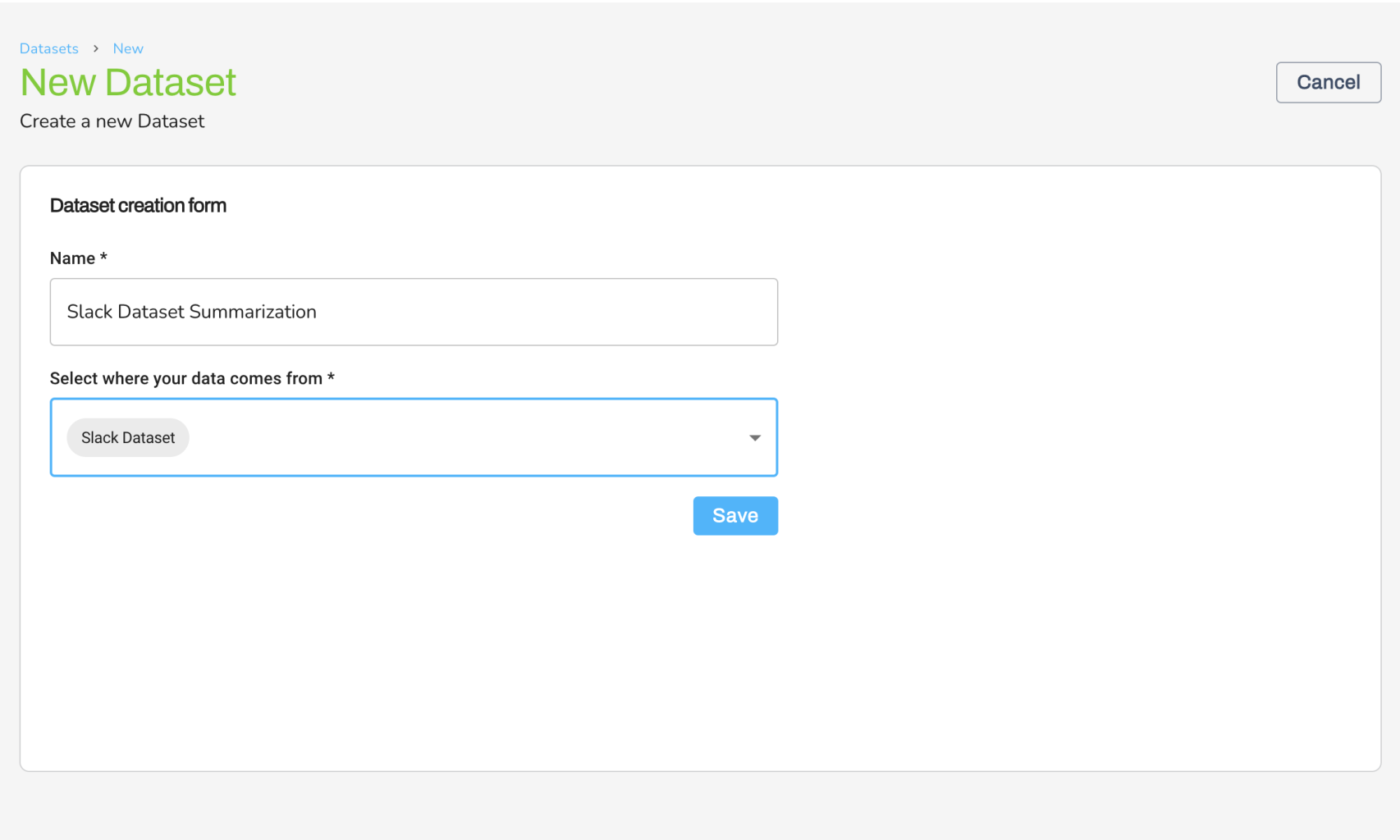

Create New Dataset

Datasets serve as the central workspace where you can apply transformations and enrichments to data retrieved from various sources, enabling you to modify and analyze the data without impacting the original information.

To create a new dataset:

- Navigate to the Datasets section by clicking Datasets on the left pane.

- On the top right corner, click on New Datasets.

- Provide a name, and select where the data comes from (Slack data source).

- Click on Save to save your configuration, and wait for the job to complete.

Create new dataset

Note that you can also create a new dataset by using the Create Custom Datasets button in the Data Source section after the sync is completed.

Apply Transformation

After creating datasets from the Slack Data Source, it’s time to apply transformations that will restructure the texts.

Below are the transformations that we can apply to the Slack Data Source.

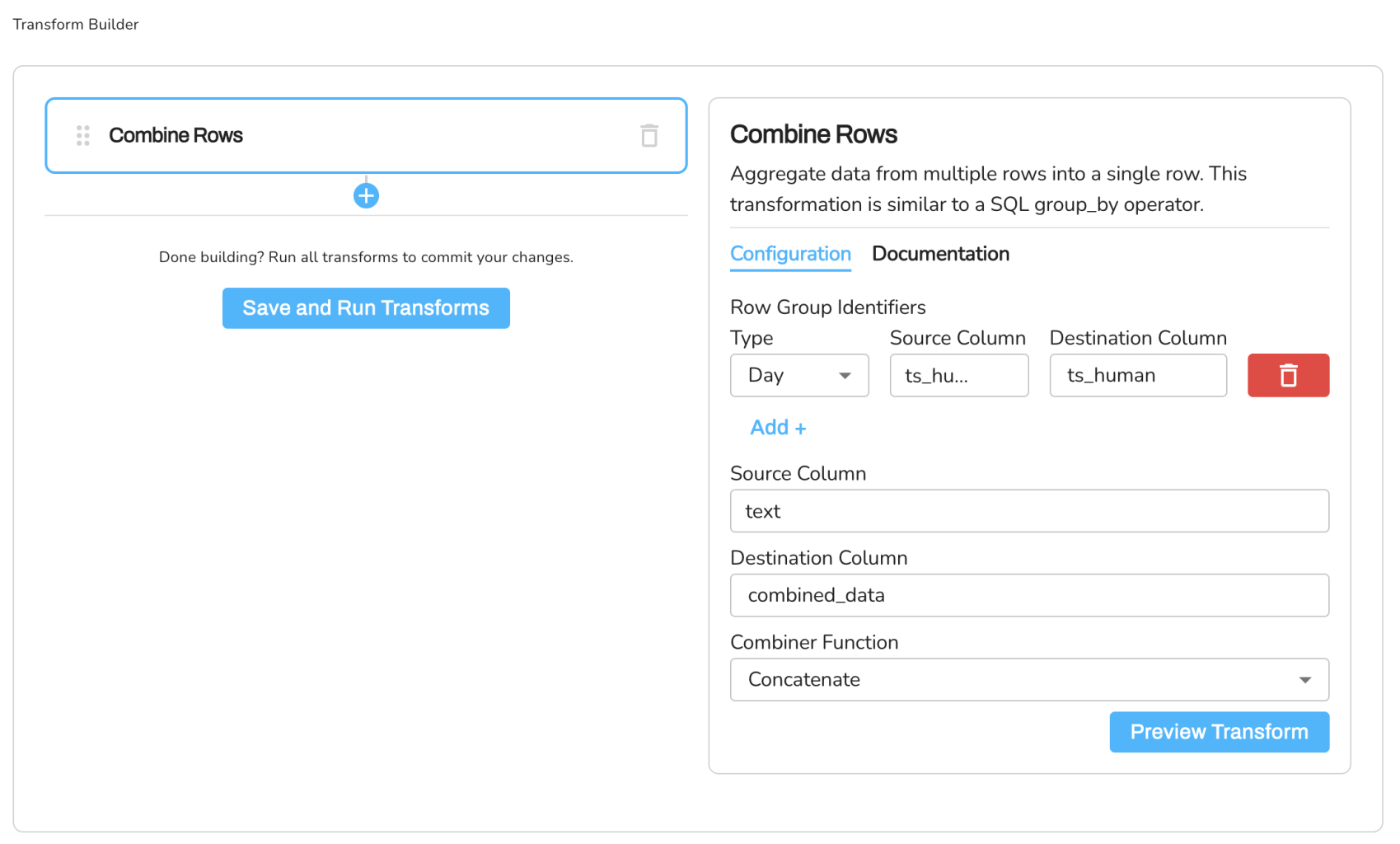

Combine Rows

The original Slack data stores each message on its own row: if I send a message, it occupies a single row, and so does every other message. The result is many rows of individual messages, which we need to combine into a more usable format.

To do this:

- Navigate to Transforms in the Datasets section, and select Combine Rows from the list of transforms.

- Enter the configuration parameters as shown below (also see image below).

Row Group Identifiers:
  - type: Day
    source_column: ts_human
    destination_column: ts_human

Source Column: text

Destination Column: combined_data

Combiner Function: Concatenate
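To make the effect of this configuration concrete, here is a minimal Python sketch of the same idea: group messages by calendar day and concatenate their text. It is not Mantium's implementation. The sample rows, the `combine_rows_by_day` helper, the use of Slack's raw `ts` epoch value, the UTC day boundary, and the newline separator are all assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical rows shaped like synced Slack messages.
# Slack's `ts` is a string of epoch seconds with a fractional suffix.
rows = [
    {"ts": "1683540000.000100", "text": "Deploy is scheduled for 3pm."},
    {"ts": "1683543600.000200", "text": "Staging looks good."},
    {"ts": "1683626400.000300", "text": "Deploy went out without issues."},
]

def combine_rows_by_day(rows, source_column="text", destination_column="combined_data"):
    """Group rows by calendar day (UTC, an assumption) and concatenate the source column."""
    groups = defaultdict(list)
    # Sort by timestamp so each day's text reads in chronological order.
    for row in sorted(rows, key=lambda r: float(r["ts"])):
        day = datetime.fromtimestamp(float(row["ts"]), tz=timezone.utc).date().isoformat()
        groups[day].append(row[source_column])
    # One output row per day; the newline separator is an assumption.
    return [
        {"ts_human": day, destination_column: "\n".join(texts)}
        for day, texts in groups.items()
    ]

daily = combine_rows_by_day(rows)
for row in daily:
    print(row["ts_human"], "->", repr(row["combined_data"]))
```

With the three sample messages, the first two fall on the same day and end up in one row, while the third gets a row of its own.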

Here is the expected dataset, with each row representing one day of conversation.

Generate Daily Summaries

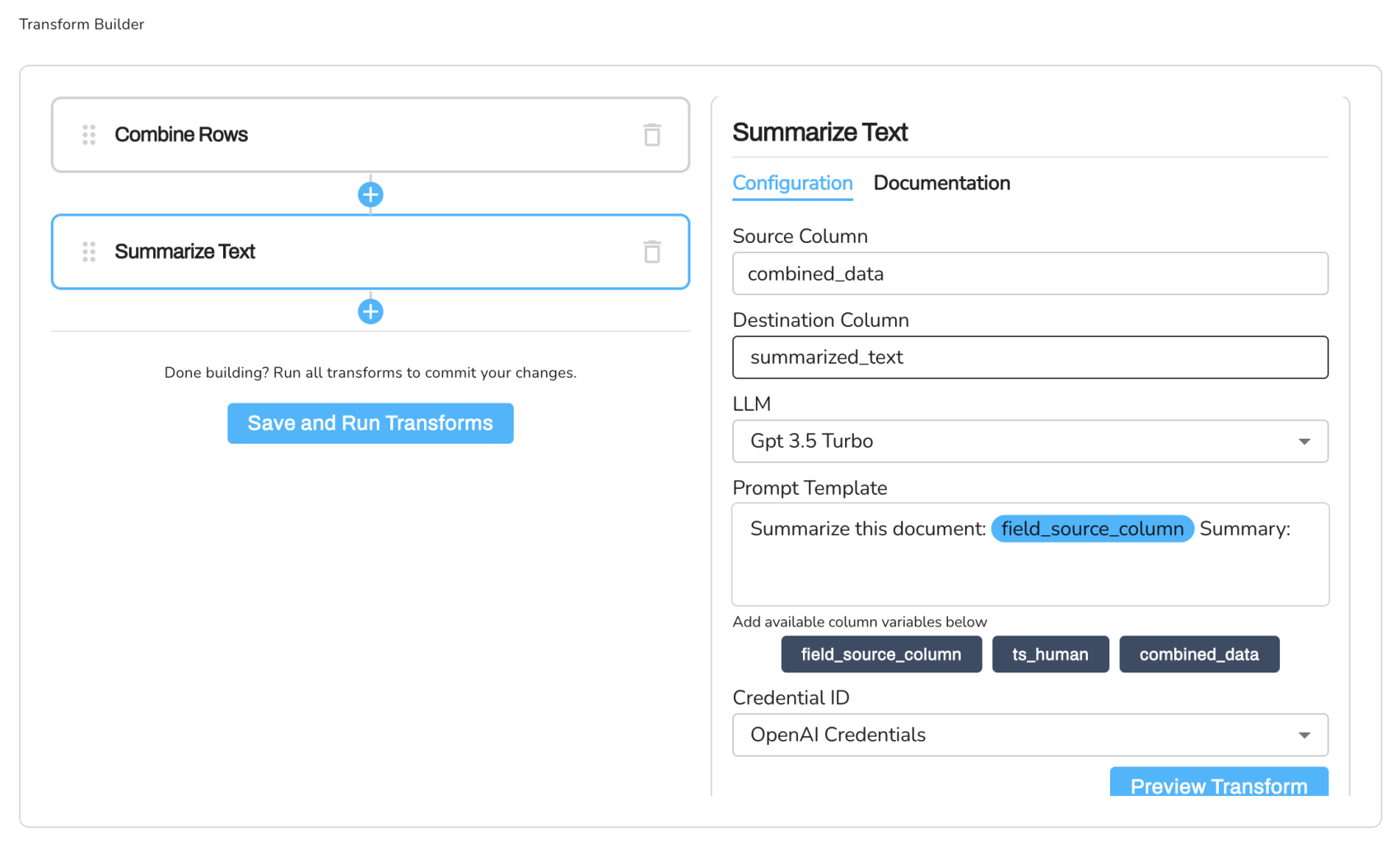

Remember that we aggregated the data by day, so each row now holds one day of conversation. To generate a summary of each row, in this case a daily summary, we will use the Summarize Text transform.

Note that you can chain this transformation to the initial one (Combine Rows), and run it sequentially using the plus (+) icon, or you can perform both independently.
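Conceptually, chaining means that the output of one transform becomes the input of the next. The sketch below illustrates that idea in plain Python; it does not use Mantium's API. The function names `combine_rows`, `summarize_text`, and `run_chain` are hypothetical, and the summarizer is a stand-in that keeps the first few words instead of calling a language model.

```python
from functools import reduce

def combine_rows(rows):
    """Stand-in for Combine Rows: merge rows that share a `day` into one row."""
    merged = {}
    for row in rows:
        merged.setdefault(row["day"], []).append(row["text"])
    return [{"day": day, "combined_data": " ".join(texts)} for day, texts in merged.items()]

def summarize_text(rows, max_words=5):
    """Stand-in for Summarize Text: keeps the first few words, no LLM call."""
    return [
        {**row, "summarized_text": " ".join(row["combined_data"].split()[:max_words])}
        for row in rows
    ]

def run_chain(rows, transforms):
    """Apply each transform to the output of the previous one."""
    return reduce(lambda data, transform: transform(data), transforms, rows)

# Hypothetical sample messages, already tagged with their day.
messages = [
    {"day": "2023-05-08", "text": "Deploy is scheduled for 3pm."},
    {"day": "2023-05-08", "text": "Staging looks good."},
]

result = run_chain(messages, [combine_rows, summarize_text])
print(result)
```

Running the two transforms independently corresponds to calling `combine_rows` and `summarize_text` one after the other by hand; the chained form simply fixes the order up front.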

To generate the daily summaries:

- Select the Summarize Text transform from the list of transforms.

- Enter the configuration parameters as shown below (also see image below).

Source Column: combined_data

Destination Column: summarized_text

LLM Model: Gpt-3.5-turbo

Prompt Template: "Summarize this document: $field_source_column Summary:"

Credential ID: OpenAI

Notice that the Source Column here is combined_data, the column produced by the previous transformation step (Combine Rows). Also, ensure that you have connected your OpenAI credentials to the platform; see steps here.

- Click on Save and Run Transforms to complete the process.
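To see how the Prompt Template relates to the Source Column, the following sketch fills the `$field_source_column` placeholder with one row's `combined_data` value. It assumes the placeholder behaves like Python's `string.Template` substitution, which is an assumption about Mantium rather than documented behavior. The `render_prompt` helper and the sample row are hypothetical, and no request is sent to OpenAI.

```python
from string import Template

# The prompt template from the configuration above.
prompt_template = Template("Summarize this document: $field_source_column Summary:")

def render_prompt(row, source_column="combined_data"):
    """Insert the row's source column into the template placeholder."""
    return prompt_template.substitute(field_source_column=row[source_column])

# Hypothetical row as produced by the Combine Rows step.
row = {
    "ts_human": "2023-05-08",
    "combined_data": "Deploy is scheduled for 3pm.\nStaging looks good.",
}

prompt = render_prompt(row)
print(prompt)
```

The rendered string is what a model such as the one selected under LLM Model would receive for that day, and its reply would be stored in the Destination Column.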

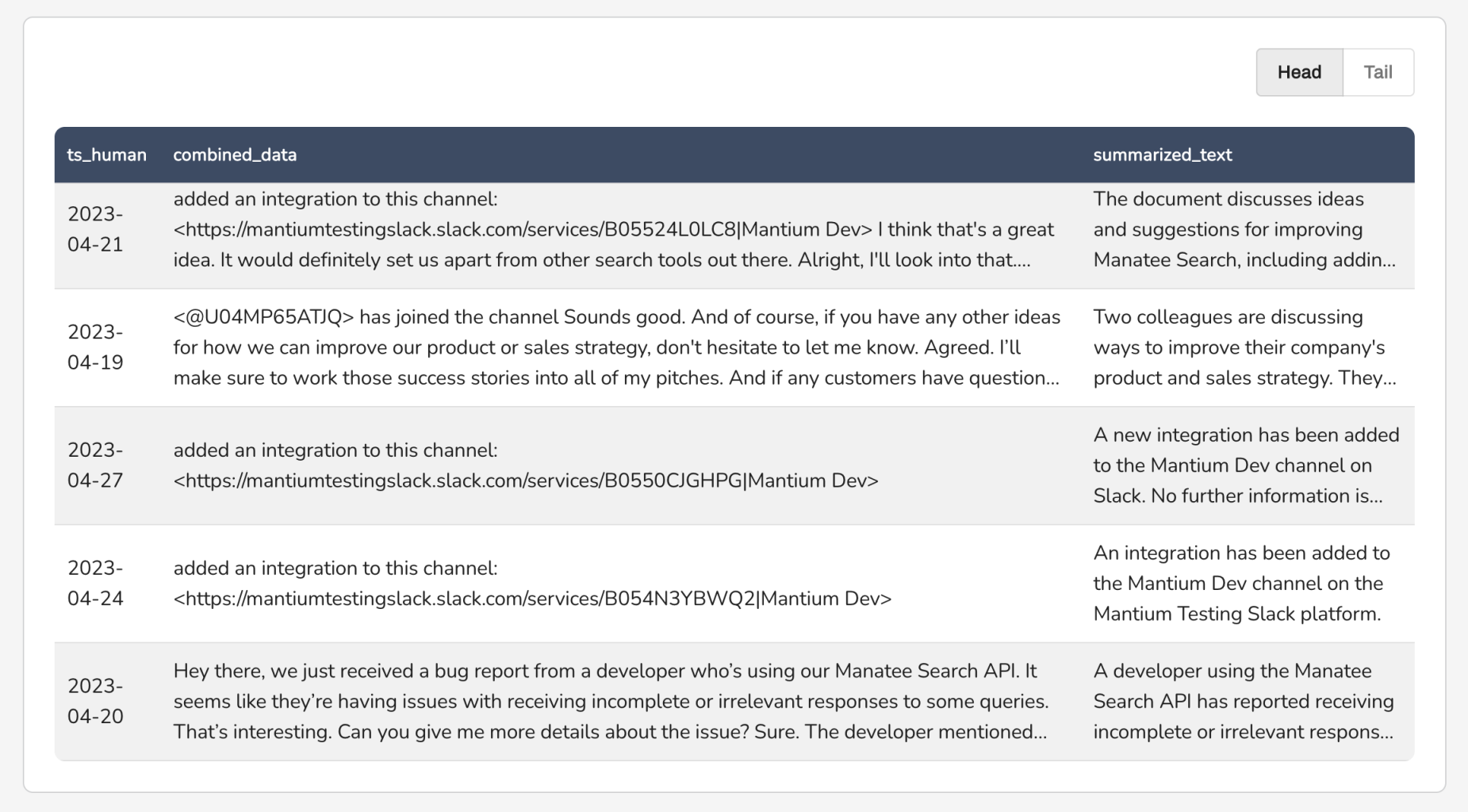

Expected dataset

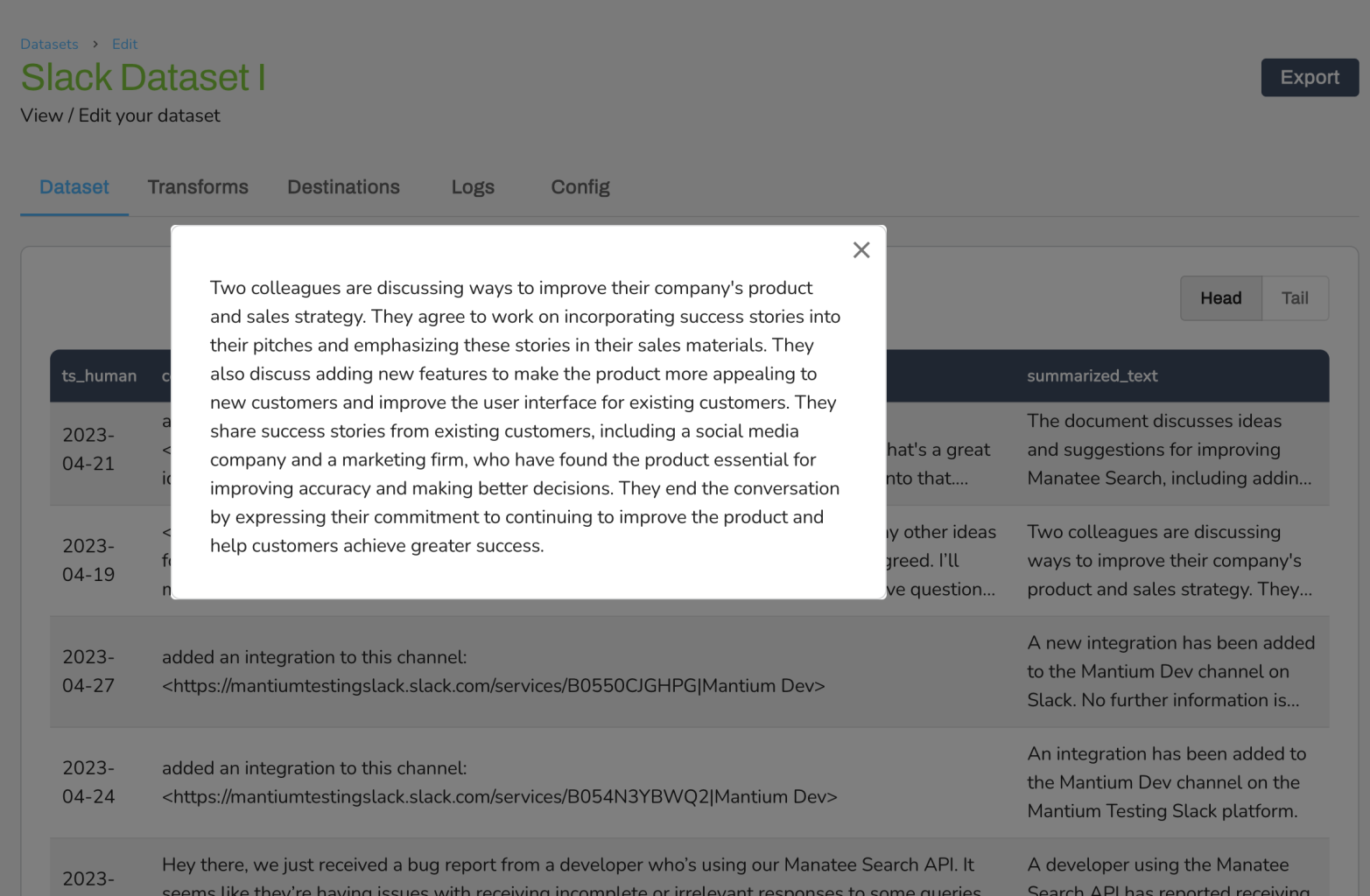

Example of summarized text for a specific day.

Conclusion

Congratulations! You have now successfully analyzed Slack conversations using Mantium's platform. You should now be able to import messages from Slack channels, aggregate the text, generate summaries, and synchronize the data for real-time analysis or machine learning purposes.
